In the previous installment of this story, I learned that the company had somehow managed to fill 200GB of free space on the network shares overnight. I was more baffled than surprised, as this sort of thing was not new. Our company was known to go on huge data digitization binges without ever bothering to tell IT about it, or to purchase appropriate hardware to store the results. In fact, most such projects were closely guarded secrets, hidden away from IT because the people who came up with them did not want to be blamed for additional tech-related expenses. I got my buddy Larry up to speed on the case, and gave him a mission:

“I need you to use your contacts upstairs to get some intel for us. See if the bright heads over there got any new genius ideas like the scanning project.”

This was the kind of task that required some finesse, charm and social agility. It was a delicate social engineering hack that warranted a light touch. Larry was about as sneaky as a beached walrus, and as socially agile as a wounded elephant in a china shop. He was perfect for the job. His investigation would be brash, abrasive and accusatory, and it would instill fear in the legions of the luserati. He would be the bad cop, to my… lawful evil, but less threatening cop. Or something like that. Someone would eventually squeal under the pressure, or call me with the information hoping I could shield them from the wrath of Larry.

While my trusty minion went to ruffle some feathers and throw his weight around, I decided to investigate the file shares themselves. My plan was to identify large folders, and ask Jeremy why they grew so big, and/or if they could be archived to a different share to make space. Thankfully I was running Linux, so this would be somewhat easy. I mounted the problem directory as a Samba share, then did:

du -skh *

If you are Unix illiterate, this command prints out disk usage stats. The -s makes it print only the top-level directories and files, while still recursing into them to calculate their sizes, and -h makes it display the sizes in human-readable format (i.e. KB, MB and GB rather than a raw count). The -k is redundant here: it asks for kilobyte units, and with GNU du the -h that follows it takes precedence. The result of this command was eye-opening. Most of the folders on the drive were tiny. Only a few took more than a gig of storage. There was nothing outrageously big there, except one entry:

257G DfsrPrivate
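
If you don't have du handy, the same kind of top-level survey can be roughed out in a few lines of Python. This is only a sketch and not what I actually ran: the function names are made up for illustration, and it adds up apparent file sizes rather than allocated disk blocks, so the numbers will not match du exactly.

```python
import os

def tree_size(path):
    """Sum of apparent file sizes (bytes) under path; symlinks are not followed."""
    if os.path.islink(path) or not os.path.isdir(path):
        return os.lstat(path).st_size
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                total += os.lstat(os.path.join(root, name)).st_size
            except OSError:
                pass  # unreadable or vanished file: skip it
    return total

def human(size):
    """Format a byte count with 1024-based units, roughly like du -h."""
    for unit in ("B", "K", "M", "G"):
        if size < 1024:
            return f"{size:.0f}{unit}" if unit == "B" else f"{size:.1f}{unit}"
        size /= 1024
    return f"{size:.1f}T"

def top_level_usage(share):
    """(bytes, name) for each top-level entry of share, largest first."""
    usage = [(tree_size(os.path.join(share, name)), name) for name in os.listdir(share)]
    return sorted(usage, reverse=True)
```

Looping over top_level_usage() for the mounted share and printing human(size) next to each name puts the biggest offenders at the top, which is all I was really after.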

DfsrPrivate is a hidden system folder created and maintained by the Microsoft DFS Replication Service. Without getting into too much technical detail, this service keeps file shares on different servers in sync with each other. We set up most of our file sharing servers this way as a means for rapid disaster recovery – always in working pairs, so if one of them fails, you can immediately fail over to the second one while you gut and restore the first.

The DfsrPrivate folder is used for staging the files that are to be replicated, and for storing copies of files on conflict. That conflict folder turned out to be the actual culprit. Normally, the contents of DfsrPrivate\ConflictAndDeleted are supposed to be kept under 600MB in size. Every once in a while though, the DFSR service decides to ignore the quota and starts dumping huge amounts of data there, without ever deleting anything.
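
To catch this before the disk fills up, you could periodically compare the size of that folder against the quota. Below is a minimal sketch of such a check. The 600MB figure is simply the one quoted above (the value configured on your server may differ), and the function names are hypothetical.

```python
import os

QUOTA_MB = 600  # figure quoted in the post; an assumption for any other server

def folder_size_bytes(path):
    """Add up the sizes of all regular files below path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                total += os.lstat(os.path.join(root, name)).st_size
            except OSError:
                pass  # file disappeared or is unreadable: skip it
    return total

def over_quota(path, quota_mb=QUOTA_MB):
    """Return (size_in_mb, exceeded) for the folder at path."""
    size_mb = folder_size_bytes(path) / (1024 * 1024)
    return size_mb, size_mb > quota_mb
```

Pointed at the ConflictAndDeleted folder of a share, a True in the second slot means the service has stopped honoring its quota.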

A quick Google search revealed a Microsoft TechNet blog post that described the exact same problem I was having and outlined a solution. In case that blog ever goes down, here is what you do.

First run the following command:

WMIC.EXE /namespace:\\root\microsoftdfs path dfsrreplicatedfolderconfig get replicatedfolderguid,replicatedfoldername

Yes, this is Windows administration, and we are using a shell. Is your mind blown yet? Anyway, the output of the above should give you the GUIDs for all the network shares you have on the affected server. If you only have one share, then you will get one entry that will look something like: 70bebd41-d5ae-4524-b7df-4eadb89e511e. If you have more shares, make sure you pick the right one, and copy the GUID.

Then you run the following command:

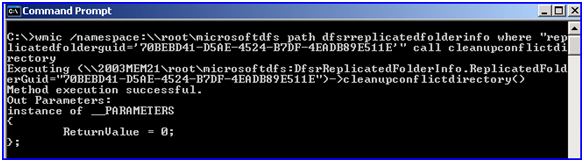

WMIC.EXE /namespace:\\root\microsoftdfs path dfsrreplicatedfolderinfo where "replicatedfolderguid='70bebd41-d5ae-4524-b7df-4eadb89e511e'" call cleanupconflictdirectory

Make sue you substitute the GUID I posted with the one that was generated for your share, otherwise this won’t work. The output will actually look like the script bugged out and dumped a weird error message like this:

This is actually what you want to see. It means it’s working. Once you do it, wait a few minutes and check your DfsrPrivate\ConflictAndDeleted folder. It should be either empty, or significantly reduced in size. If it’s not, you can go to Plan B, which is manual deletion.
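
Since pasting the wrong GUID is the easiest way to botch the cleanup step, here is a small sketch that checks a copied GUID and drops it into the cleanup command shown earlier. It only builds the command line as text for pasting into a prompt and does not run anything. The function name is made up, and insisting on the hyphenated 36-character form is my own assumption about what you will be copying.

```python
import uuid

# Same command as in the post, with the GUID left as a placeholder.
TEMPLATE = (
    r"WMIC.EXE /namespace:\\root\microsoftdfs path dfsrreplicatedfolderinfo "
    "where \"replicatedfolderguid='{guid}'\" call cleanupconflictdirectory"
)

def cleanup_command(guid_text):
    """Return the cleanup command line for one replicated folder GUID."""
    text = guid_text.strip()
    # uuid.UUID raises ValueError on garbage; the comparison additionally
    # rejects anything that is not in the plain hyphenated form.
    if str(uuid.UUID(text)) != text.lower():
        raise ValueError(f"not a plain hyphenated GUID: {text!r}")
    return TEMPLATE.format(guid=text)
```

Calling cleanup_command() with the example GUID from above reproduces the command exactly as posted, while a truncated or mangled GUID fails loudly instead of producing a command that quietly matches nothing.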

I run through this solution, run the WMIC scripts and get virtually no results. Plan B it is then. In case you didn’t know, the B in Plan B stands for “Brute Force” or “Brace Yourself”. I take a deep breath, kill the DFSR service, and then manually delete EVERY-FUCKING-THING in that folder. I restart the service and all is well. Replication continues as normal, the quota is once again respected, and the users have more than 250 GB to fill before we have a storage problem again. We are back on schedule for Peak Storage 2014. Crisis has been temporarily averted. Now it’s back in its place – hanging above our heads along with 113 other critical problems that management refuses to address until they start threatening to shut down the company, or interfere with the browsing of Facebook.

A few hours later, I get the following email:

Luke, buddy! I hear there were some problems with downloading or something. Larry was here in Marketing asking all kinds of weird questions about network sharewares and what not. It made me nervous. I hope I don’t get in trouble for this, but about a week ago I downloaded my iTunes music onto the G: drive on my computer (that’s the one with lots of free space) cause I like to listen to my music as I work. That’s ok, right? It’s all legit stuff I paid for, and it’s on my computer so it should not matter. I didn’t tell Larry cause he would probably write me up or something. Let me know if I could get in trouble for this. I put the music under In Process\Marketing\Legal cause I knew no one would ever look there.

Apparently my Larry “the bad cop” gambit worked like a charm, and spooked at least one dude who was making unauthorized use of the network resources to store his pirated music. My response was along the lines of:

No worries, I got your back – I deleted all that music so that you don’t get in trouble. Make sure you don’t bring any more music on the G:, H: or I: drives because those are shared network resources and it will get you in trouble. I promise not to tell Larry.

Email CC’d to Larry and that guy’s supervisor of course. Pity that his collection was less than a gig – I was hoping for more space savings.

The moral of the story: if something eats hundreds of gigabytes of storage over the weekend, don’t automatically blame the users. Chances are it’s a shitty Microsoft service instead. Actually, scratch that – blame the users anyway. They deserve it.

I assume you know the complete BOFH series by heart ;)

Ha! I don’t have to worry about shitty Windows services filling up the shares I maintain. Then again, my shares aren’t so much shares as archives and rather than being a terabyte in size, they’re closer to 30 petabytes. Oh, and even saying the word “delete” to my users can lead to firings.

@ ths:

Actually no. I never read the whole series – only isolated bits here and there. :P

@ astine:

Holy crap, 30 petabytes? That’s a lot of space! Are you using striped RAID or something? I’m fairly sure there are no petabyte drives out there.

We have RAID devices, sure. Actually, the bulk of the data is stored on robotic tape systems which use SAN devices as a front-end cache so that we can fake a proper filesystem. I’m at the US Library of Congress, in case you’re wondering why we need so much storage.

@ astine:

Robotic tape? Like this: http://www.youtube.com/watch?v=2efhrCxI4J0

previous installment link at the top is wrong (room 404), should point here.